Artificial intelligence (AI) is everywhere. It has found its home in our pockets, screens and workflows. Today, even the most straightforward writing tools like Grammarly have evolved into intelligent partners that do more than just spot misplaced commas.

As a content writer, I’ve watched this AI revolution with both awe and unease. The exciting thing is that AI brings new possibilities to our work. The problematic part? The rise of a new kind of skepticism — skepticism around the authenticity of content. An increasing number of voices seem to ask: Is this human-crafted, or is it AI-generated?

Since AI’s influence is growing at an unprecedented pace, these concerns are completely valid. But what happens when AI detectors — tools designed to validate content authenticity — flag content incorrectly? Can we fully rely on these AI detectors, or could they undermine the efforts and skill of the human behind the content?

Let’s unpack this together. But first things first …

What are AI Detection Tools?

AI detection tools analyze text, seeking indicators that suggest machine generation. The market offers a variety of these tools, each claiming distinct capabilities. Here are a few examples:

- Content at Scale: This tool claims to detect AI-generated content from various sources, such as ChatGPT, GPT-4 and Bard, with an impressive “98% accuracy rate.” Moreover, it provides a ‘quality score’ for content. Despite these assertions, the specific parameters it evaluates to determine AI authorship remain undisclosed.

- ZeroGPT: This detector positions itself as a reliable guard against “AI and ChatGPT plagiarism.” The use of “plagiarism” here is questionable; we’ll get into that shortly.

- AI Classifier by OpenAI: This tool initially promised to assign probability scores indicating whether content was AI-generated, but OpenAI recently discontinued it, citing its low rate of accuracy. This incident is a stark reminder that even the most sophisticated systems can falter, especially in a relatively unexplored domain such as AI detection.

Now, back to the topic of plagiarism. Yes, plagiarism checkers also help verify content authenticity, but they do so in a fundamentally different way.

Plagiarism checkers work by comparing content against an extensive database of existing text to determine whether a piece of work has been copied from somewhere else. They don’t care whether the content was written by a human or a machine, only whether it’s original. They even go the extra mile, identifying the exact source of any duplicate content so you can see where, and why, the text appears plagiarized.
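To make that contrast concrete, here is a deliberately simplified Python sketch of the kind of comparison a plagiarism checker performs. It’s an illustration built on our own assumptions, not how any particular commercial checker works: it breaks text into overlapping five-word sequences (“shingles”) and reports what share of them also appear in each known source. The function names and the tiny in-memory “database” are hypothetical; real checkers search vastly larger collections with more sophisticated matching.

```python
import re

def shingles(text: str, n: int = 5) -> set[tuple[str, ...]]:
    """Break text into overlapping n-word sequences ("shingles")."""
    # Normalize: lowercase and keep only word characters, so punctuation
    # and capitalization don't hide a match.
    words = re.findall(r"[a-z0-9']+", text.lower())
    return {tuple(words[i:i + n]) for i in range(len(words) - n + 1)}

def find_matches(submission: str, corpus: dict[str, str], n: int = 5) -> dict[str, float]:
    """For each source that shares text with the submission, return the
    fraction of the submission's shingles that also appear in that source."""
    submitted = shingles(submission, n)
    if not submitted:
        return {}  # Too short to compare meaningfully.
    matches = {}
    for source_name, source_text in corpus.items():
        overlap = submitted & shingles(source_text, n)
        if overlap:
            matches[source_name] = len(overlap) / len(submitted)
    return matches

# Hypothetical two-document "database", for illustration only.
corpus = {
    "source-a": "The quick brown fox jumps over the lazy dog.",
    "source-b": "Completely unrelated text about something else entirely.",
}
print(find_matches("The quick brown fox jumps over the lazy dog today.", corpus))
```

Run as written, it traces five of the submission’s six shingles to source-a and ignores source-b. Because every match points back to a specific entry in the database, a checker built this way can always show where copied text came from.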

On the other hand, AI detection tools don’t perform comparison checks against an existing database. Their role is to determine whether a text was written by a human or a machine. The problem is, we don’t really know how they make their decisions; they don’t tell us what they’re comparing the text to or why they think it sounds like it was written by AI.

So, when ZeroGPT positions itself against “AI and ChatGPT plagiarism,” it’s making a slightly muddled claim. AI-generated content, even if it matches the tone or style of a specific AI model, isn’t plagiarism unless it duplicates existing human-written text. And that’s something these AI detectors aren’t designed to identify.


Testing the Reliability of AI Detectors

To investigate the reliability of AI detectors, we put them to the test with a simple experiment. We used ChatGPT, one of the most advanced AI text generators out there, and asked it to respond to the following prompt:

“In a world of AI-generated content, how can we differentiate the real from the AI? ChatGPT, share your insights in a fun and informative way!”

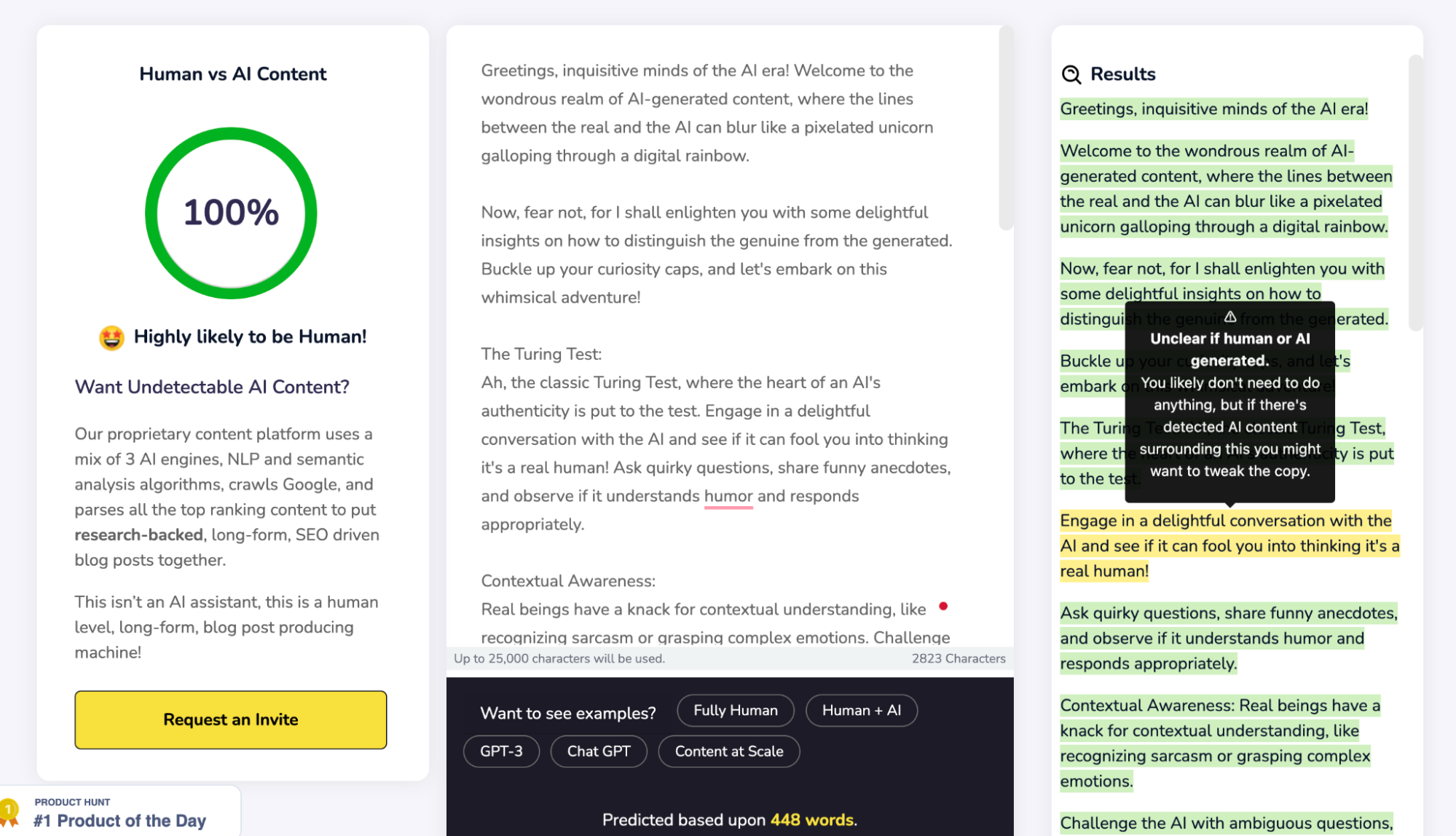

We then ran this AI-generated response through two separate AI detection tools: ZeroGPT and Content at Scale. Intriguingly, both tools concluded that the text was 100% human-written and detected no AI content at all. We knew this assessment was wrong, because we had used ChatGPT’s output unaltered. See below.

Content at Scale

ZeroGPT

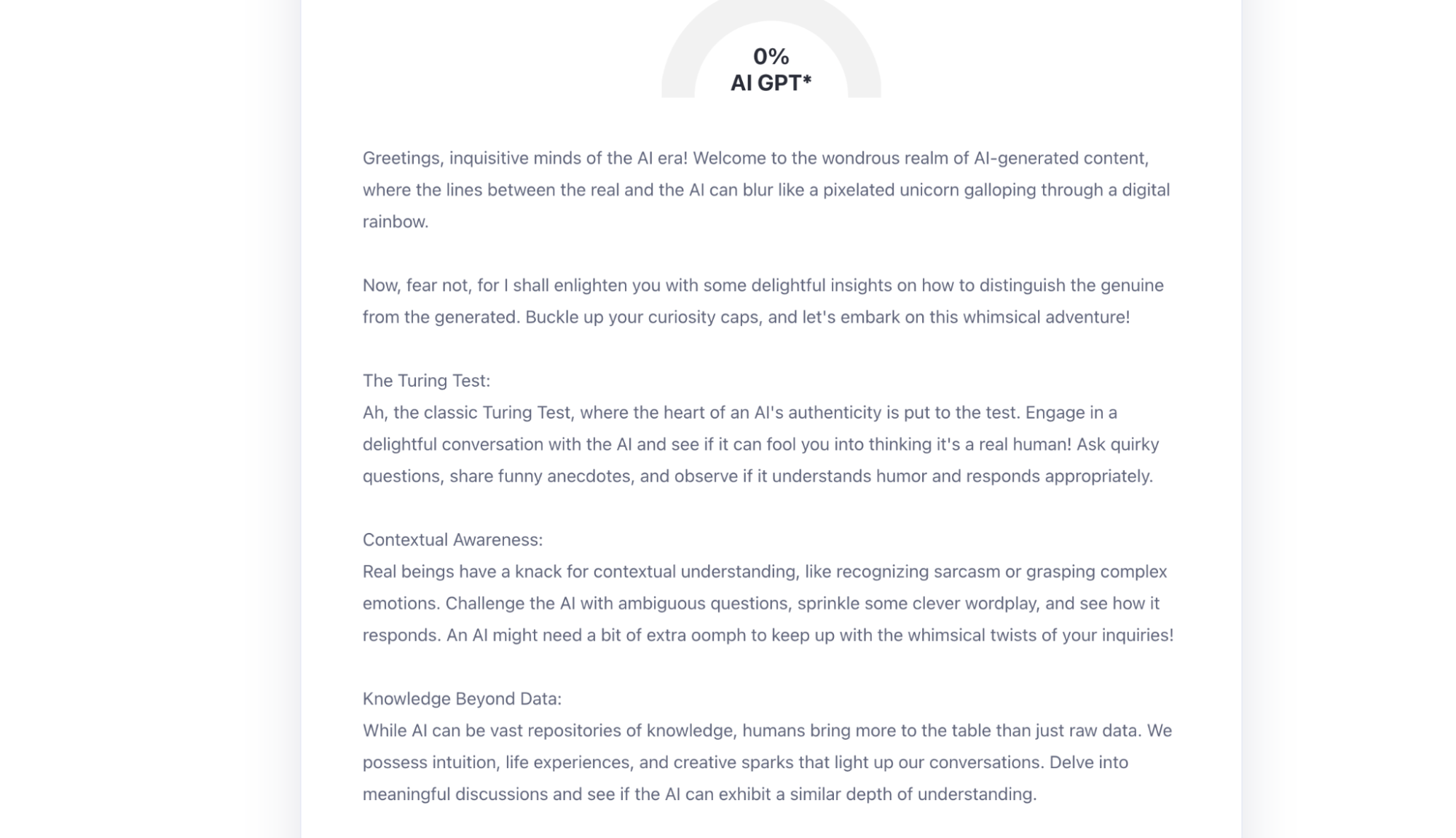

This shared result suggested that ZeroGPT and Content at Scale might rely on similar mechanisms to identify AI-generated content, and that those mechanisms failed to recognize the text’s AI origins in this case. To dig deeper, we went back to ChatGPT and asked for another response to the same prompt, this time with one small change: we omitted the phrase “in a fun and informative way!”

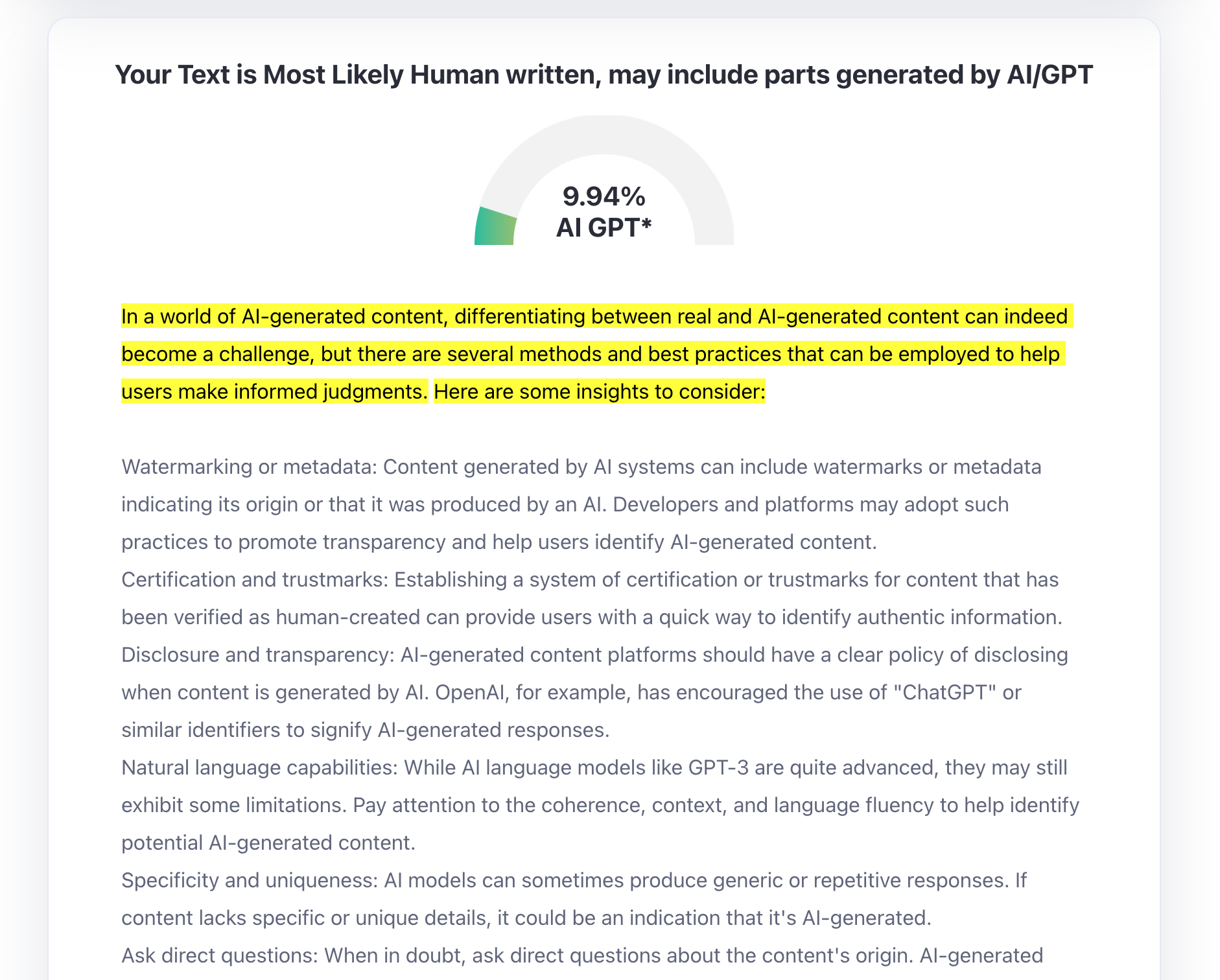

With the new text in hand, we returned to the AI detection tools. The results were different this time: ZeroGPT identified approximately 10% of the content as AI-written, and Content at Scale rated 61% of the content as human-written. Interestingly, both tools flagged the same introductory paragraph as AI-generated. See below.

Content at Scale

ZeroGPT

The passage in question read as follows: “In a world of AI-generated content, differentiating between real and AI-generated content can indeed become a challenge, but there are several methods and best practices that can be employed to help users make informed judgments. Here are some insights to consider:”

Content at Scale labeled this introduction ‘robotic’ but offered no explanation of how it reached that conclusion. So …

Can We Detect AI Content Manually?

Humans have unique capabilities when it comes to understanding and evaluating content. We may not have the speed and scalability of AI, but what we lack in quantity, we make up for in quality. Here’s what we can discern:

- Repetition: In its effort to generate relevant content, AI can often loop back on certain points, leading to unintended repetition. Humans are well-equipped to pick up on such nuances.

- Incorrect information: AI models generate text based on patterns and structures, but they don’t have a real-world understanding or context — which is why they can churn out text that’s factually incorrect.

- Outdated stats/figures: AI tools typically don’t cite their sources and don’t have real-time knowledge of world events, so they may use outdated statistics or figures. ChatGPT, for instance, was trained on information only up to 2021 at the time of writing.

- Lack of analysis: While AI can generate persuasive arguments based on data fed into it, it lacks the ability to critically analyze a situation or provide a new perspective.

- Spelling errors and regional variations: AI, while highly sophisticated, can still struggle with the variations in spelling, grammar and usage that exist between different regional dialects of a language. For instance, it might not always correctly navigate the differences between American English and British English or might misinterpret regional slang, colloquialisms or idioms.

And the crucial point is: These are factors that AI detectors don’t check for.

It’s evident that human detection has distinct advantages. Google has often highlighted the importance of unique and helpful content for search engine optimization (SEO). The factors that humans can differentiate, such as relevance, accuracy and depth of analysis, directly impact SEO. In contrast, whether an AI detector flags a piece of content has no bearing on SEO.

That said, we can’t overlook the potential benefits AI detection tools might offer in the future. The industry recognizes the challenges and has been actively working toward more robust AI detection mechanisms. One promising example is the concept of an ‘AI watermark’ or a unique ‘AI alphabet’ that would mark machine-generated content. If it proves workable, this approach could transform AI detection by drawing a clear line between human and AI-generated content. Until then, it’s best to approach these tools with curiosity and caution.

Final Thoughts

The world of AI is exciting, but it’s not perfect. Our tests suggest that even popular AI detection tools have their limits and can be a bit of a mystery. But that’s OK. This isn’t meant to knock these tools; it’s a reminder to use them wisely and ask questions when needed.

Here at Brafton, we’re not averse to AI. In fact, we appreciate the vast potential that these tools offer. As with any technology, our aim is to leverage their capabilities to enhance our work and services and, ultimately, benefit our clients.

We’re actively developing policies around using AI tools like ChatGPT to ensure they augment, not replace, human creativity. We’re clear that we don’t endorse the use of unedited AI-generated copy in our work. One reason is that AI models learn from vast amounts of internet data, which may inadvertently lead them to generate content that echoes copyrighted material.

As a responsible content provider, our commitment to delivering original, meaningful pieces of work remains strong and unchanged. In the end, people are irreplaceable. We believe in the ‘human touch’ and critical thinking. AI is a powerful tool, but it’s just that — a tool. It’s us, the humans, who really make the magic happen.