A surprise spike in data? How exciting. Oh wait…

Lions and tigers and spam bots, oh my! Spambots are the bane of digital marketers’ existence, throwing false positives and negatives all over your squeaky clean data.

They’re the fleas of web analytics, popping up out of nowhere, taking a quick bite, then disappearing into the safety of the carpet. But what are these mysterious creatures?

What is a spambot?

As defined by Searchexchange, a spambot is “a program designed to collect, or harvest, email addresses from the Internet in order to build mailing lists for sending unsolicited email, also known as spam. A spambot can gather email addresses from Web sites, newsgroups, special-interest group (SIG) postings, and chat-room conversations.”

So basically, they’re parasites.

And while these spambots may be bouncing off your Department of Defense-grade site security, keeping your sensitive data safe, they’re still going to screw up your web analytics data. So let’s look at how to find those pesky bots and eliminate them.

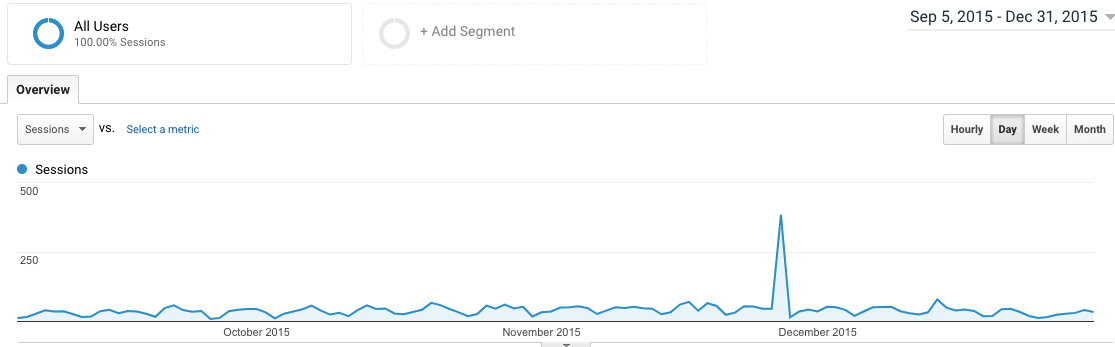

1. Identify the Spike

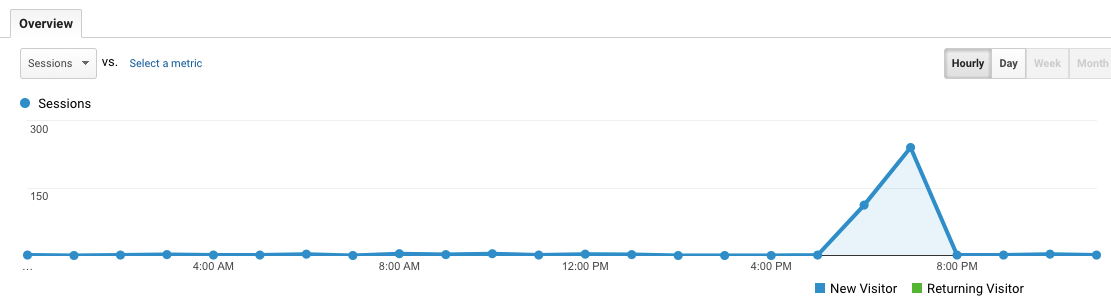

Spread out your date range in analytics and see if anything jumps out as being a little off. When you notice a random, sharp spike in traffic, withhold your enthusiasm, as it’s likely an anomaly.

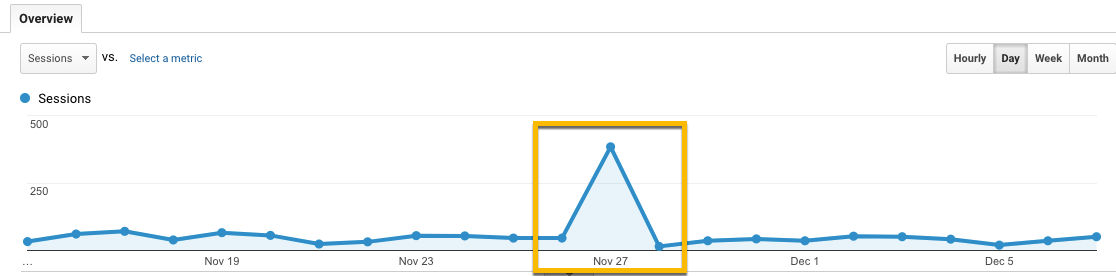

I see a bug. Do you? Let’s zoom in on the time frame to get a closer look.

Oh yeah, we have a very suspicious looking suspect on Nov. 27th. Let’s isolate that day in our date range and see what it looks like.

Hundreds of sessions between 6 and 7 p.m. Interesting…
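If you would rather not eyeball the chart, the same check can be sketched in a few lines of Python. This is a minimal sketch, not anything Google Analytics gives you out of the box: it assumes you have exported hourly session counts for the suspect day, and the function name `find_spike_hours`, the `factor` threshold and the sample numbers are all made up for illustration.

```python
from statistics import median

def find_spike_hours(hourly_sessions, factor=5.0):
    """Return (hour, sessions) pairs well above the day's typical hour.

    hourly_sessions maps hour of day (0-23) to a session count.
    An hour is flagged when it exceeds factor x the median hour.
    """
    baseline = median(hourly_sessions.values())
    # Guard against a zero baseline on very quiet days.
    threshold = factor * max(baseline, 1)
    return [(hour, n) for hour, n in sorted(hourly_sessions.items()) if n > threshold]

# Hypothetical export: a quiet day with one suspicious hour (18 = 6 to 7 p.m.).
hourly = {hour: 12 for hour in range(24)}
hourly[18] = 300

print(find_spike_hours(hourly))
```

The median is used as the baseline so that the spike itself does not drag the "normal" level upward the way an average would.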

2. Recognize Behavior

Spambots are usually deployed en masse, rather than gradually. This is their one fatal flaw. Between 6 and 7 p.m. Nov. 27th is a smoking gun. However, this is not enough evidence to convict. We need to understand a little bit more about the behavior of spambots in order to establish motive.

Typically spambots display the following characteristics:

- Originate in one geographical location.

- Come from the same IP address.

- Create a lot of sessions at once.

- Show a high bounce rate.

- Spend little or no time on site.

- Arrive as Direct or Referral traffic.

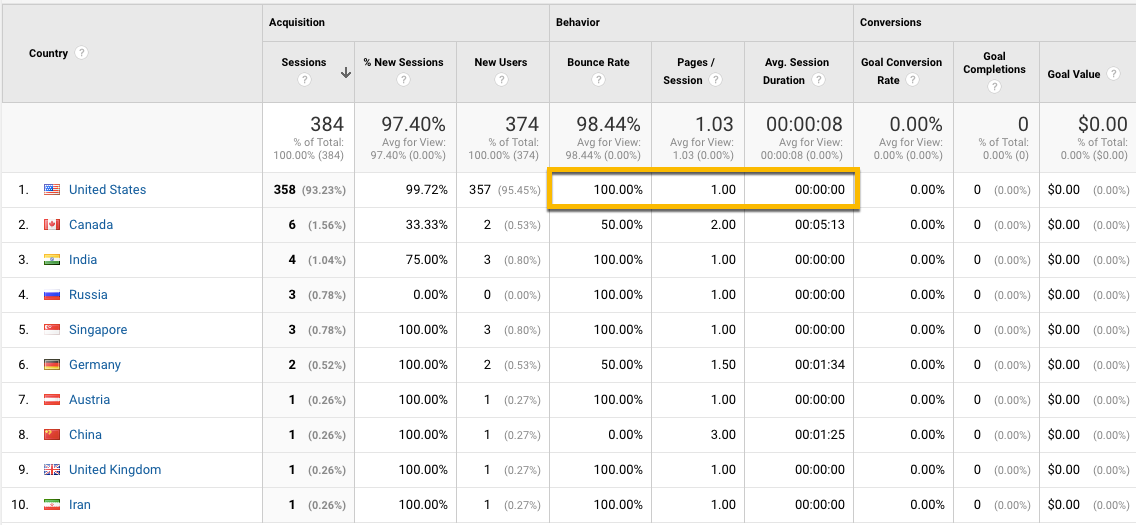

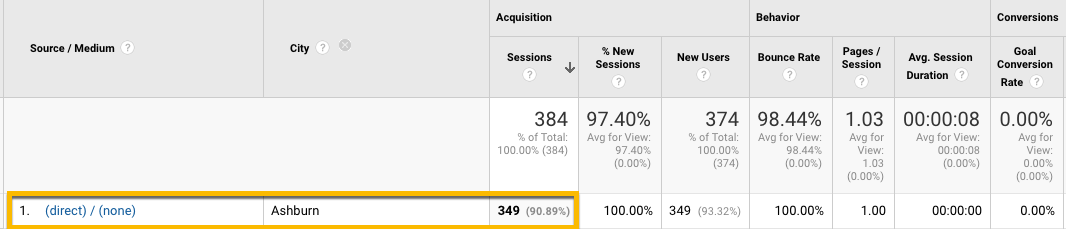

With these characteristics in mind, let’s take a look at the high-level metrics during the suspect time frame.

Because spambots typically probe only one page at a time with a unique cookie, every instance will count as a session, a bounce and zero time on site. The only reason the metrics don’t show a 0:00 average session duration, 100% bounce rate and 100% new sessions in this instance is probably because there were a couple of real visitors mixed into the dataset. Nevertheless, this is pretty solid evidence.
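To make the reasoning above concrete, here is a rough sketch of how those three metrics behave when a handful of real visitors are mixed into a pile of bot hits. Everything in it is an assumption for illustration: the `Session` record, the thresholds in `looks_like_bots` and the sample data are hypothetical, and a bounce is simplified to "a session with exactly one pageview."

```python
from dataclasses import dataclass

@dataclass
class Session:
    pageviews: int
    duration_seconds: float
    is_new_visitor: bool

def summarize(sessions):
    """Compute bounce rate, average duration and new-session rate."""
    total = len(sessions)
    if total == 0:
        raise ValueError("no sessions to summarize")
    bounces = sum(1 for s in sessions if s.pageviews == 1)
    return {
        "bounce_rate": bounces / total,
        "avg_duration": sum(s.duration_seconds for s in sessions) / total,
        "new_session_rate": sum(1 for s in sessions if s.is_new_visitor) / total,
    }

def looks_like_bots(summary, min_bounce=0.95, max_duration=5.0, min_new=0.95):
    """Flag a time window whose metrics sit near the bot extremes (illustrative thresholds)."""
    return (
        summary["bounce_rate"] >= min_bounce
        and summary["avg_duration"] <= max_duration
        and summary["new_session_rate"] >= min_new
    )

# Hypothetical hour: 98 one-page, zero-second, brand-new sessions plus 2 real visitors.
sessions = [Session(1, 0.0, True)] * 98 + [Session(4, 120.0, False)] * 2
summary = summarize(sessions)
print(summary, looks_like_bots(summary))
```

Two real visitors are enough to pull the numbers off a perfect 100% and 0:00, which is exactly the pattern described above.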

3. Isolate Location

The evidence is building; let’s keep digging. Next, we will look at the location of traffic and see if any anomalies arise. Go to Audience → Geo → Location.

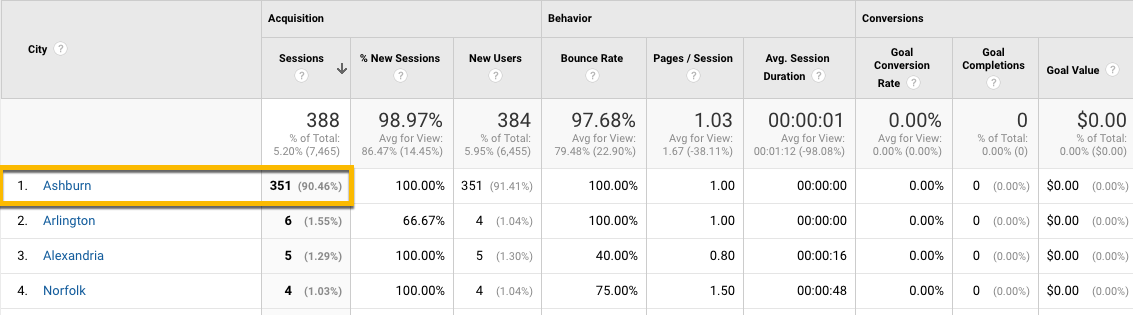

Note the unusual engagement metrics coming from U.S. locations. Dig into the United States folder to see if anything else stands out.

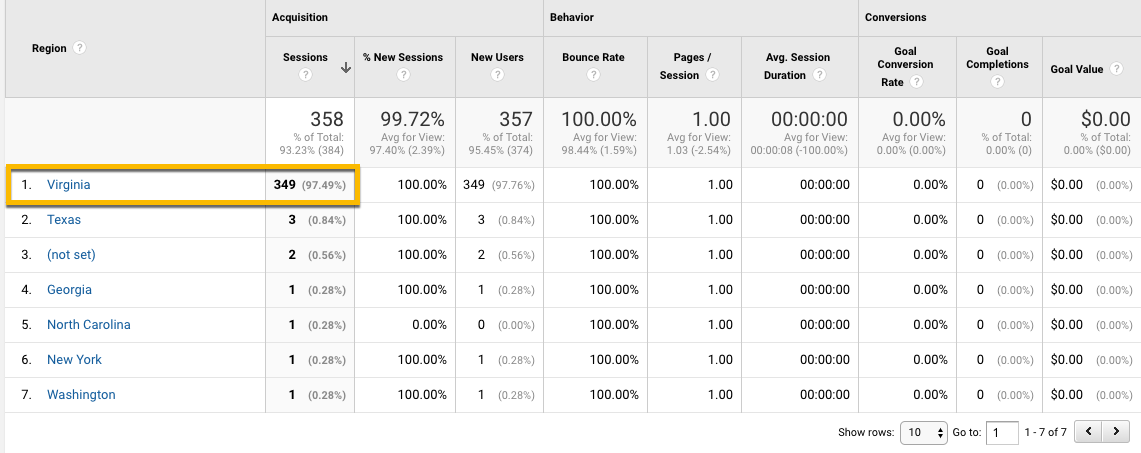

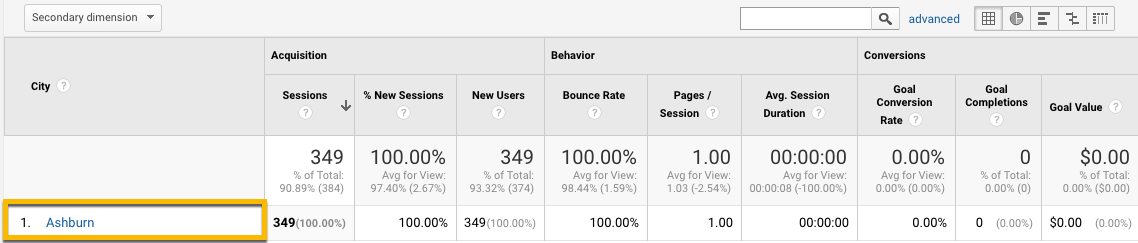

Seems unlikely that 349 unique people would have a craving for our client’s product between 7 and 8 p.m. Thanksgiving weekend. Let’s go deeper by identifying the city.

Ashburn, you sneaky little bug.
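The drill-down by location amounts to a simple group-and-rank. As a hedged sketch, assuming you have exported (city, sessions) rows for the suspect hour, something like the following would surface the same culprit. The helper name `top_cities` is hypothetical, and only the Ashburn count comes from this investigation; the other rows are invented.

```python
from collections import Counter

def top_cities(rows, n=3):
    """Sum sessions per city and return the n largest as (city, sessions)."""
    counts = Counter()
    for city, sessions in rows:
        counts[city] += sessions
    return counts.most_common(n)

# Hypothetical export for the suspect hour; Chicago and Denver are made up.
rows = [
    ("Ashburn", 349),
    ("Chicago", 40),
    ("Denver", 25),
    ("Chicago", 12),
]

print(top_cities(rows, n=2))
```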

4. Identify the Source

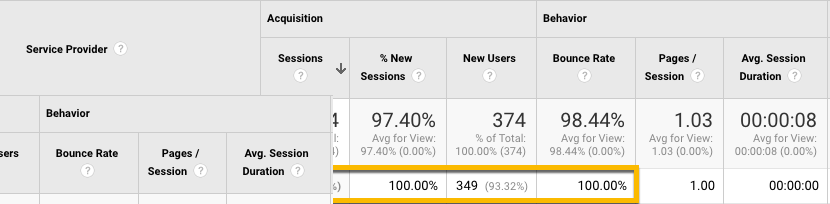

We have now established the location of the crime: Ashburn, Virginia. Now we need to know how they got to the site. If our theory holds true, all the traffic should be coming from one specific source: either Direct or Referral. Go to Acquisition → All Traffic → Source/Medium.

There’s our Ashburn traffic, coming in Direct. This case is now pretty watertight. Just to put the final nail in the coffin, take a look at the network our spammer was using to deploy his or her bots. Go to Audience → Technology → Network.

It’s a slam dunk. We have spambots. Our detective work has discovered 349 spambot sessions created from a Hubspot platform out of Ashburn, Virginia, Nov. 27th between 7 and 8 p.m. Case. Closed.

Now we are left with the question of how to omit this bad data from our reporting.

Killing Spambots

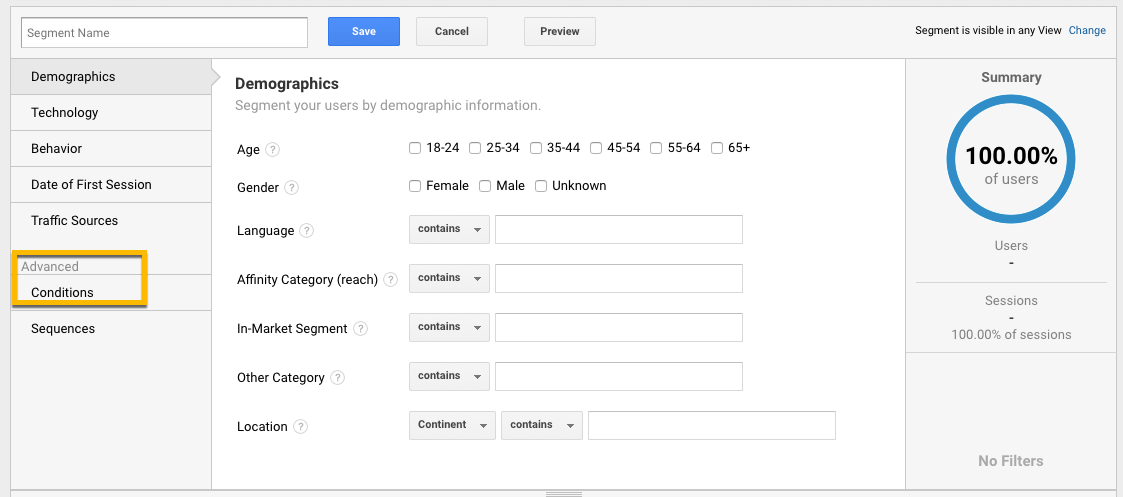

Unfortunately, in our case, the damage is already done. We cannot overwrite or delete the data that was collected in Google Analytics. What we can do is set up an Advanced Segment to omit the data from our reporting. We won’t be “killing” the spambots in this instance but rather hiding them from our reporting.

First, we need to decide which of the characteristics outlined above best distinguishes the spam from our clean traffic. When omitting traffic, we want to ensure we are omitting only the traffic caused by the spambots to minimize collateral damage.

In this case, I don’t want to eliminate the Hubspot service provider, as that is a popular platform that probably drives a lot of non-spam traffic. Let’s spread out the time period to six months to see how much traffic comes from Ashburn, Virginia. Perhaps we can simply eliminate traffic from this city.

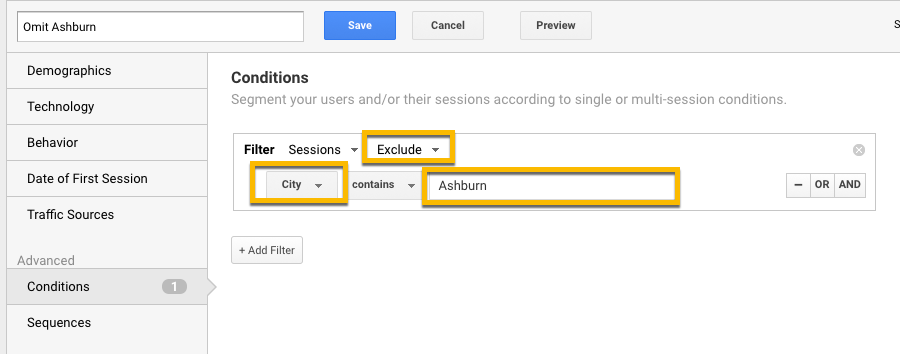

Aside from spambots, it seems very rare that we get traffic from Ashburn. I’m going to use Ashburn as our common factor to eliminate. Go to “Add Segment” at the top of the screen and click “New Segment.” Name your segment, then click on “Conditions.”

Set the filter dropdown to “Exclude,” then select “City” and type in “Ashburn.” Save.

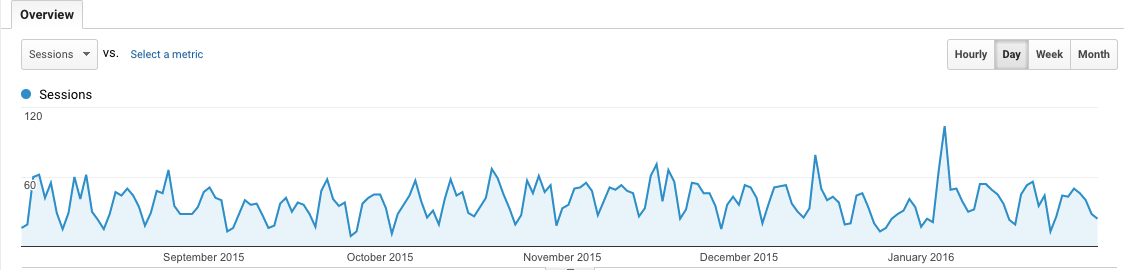

And now your reporting will omit the spam traffic. Take a look at our time period to confirm the anomaly has been removed.
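If you work with exported data rather than the GA interface, the same exclusion can be approximated outside the tool. This is only a sketch under assumptions: the row layout (dictionaries with `city` and `sessions` keys), the helper name `exclude_city` and the non-Ashburn sample rows are hypothetical.

```python
def exclude_city(rows, city):
    """Return only the rows whose 'city' does not match, ignoring case."""
    target = city.casefold()
    return [row for row in rows if row["city"].casefold() != target]

# Hypothetical export; only the Ashburn count comes from the investigation above.
rows = [
    {"city": "Ashburn", "sessions": 349},
    {"city": "Chicago", "sessions": 40},
    {"city": "Denver", "sessions": 25},
]

clean = exclude_city(rows, "Ashburn")
removed = sum(r["sessions"] for r in rows) - sum(r["sessions"] for r in clean)
print(clean, removed)
```

Printing how many sessions were removed is a quick sanity check that the exclusion took out the spike and nothing more.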

Spambots, gone.

Should you want to prevent the issue from happening again in the future, you can explore setting up filters in your admin panel to completely exclude traffic from any particular source that you have determined to be spam. You have the option of filtering traffic from a specific IP address or referral source. Using filters prevents spambots from ever registering in your data; you just have to know exactly where they are coming from using the steps above.

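For example, if I knew all my spambots were coming from the domain “www.spamcentral.com,” I would go to the Admin panel → Filters → Create new filter. I would then choose “Exclude” and “traffic from the ISP domain” and enter “www.spamcentral.com.” From then on, GA will not record traffic from this domain in the filtered view. Keep in mind that this predefined filter matches the network (ISP) domain the visitor connects from, not the referring site; to block a spam referrer, a custom filter on the campaign source is the closer fit. Filters also apply only to data collected after they are created.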
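Custom filters take a regular expression as their pattern, so it is worth testing a pattern before trusting it with your data. Below is a minimal sketch of building and checking one in Python. The helper `build_exclude_pattern` is hypothetical, the test hostnames are made up, and Python's regex dialect is only assumed to be close enough to the one your analytics tool uses for a simple pattern like this.

```python
import re

def build_exclude_pattern(domains):
    """Build a regex matching each domain exactly, or any subdomain of it."""
    # re.escape keeps the dots literal so "." cannot match arbitrary characters.
    escaped = sorted(re.escape(d.lower()) for d in domains)
    return re.compile(r"^(?:.*\.)?(?:" + "|".join(escaped) + r")$", re.IGNORECASE)

pattern = build_exclude_pattern(["spamcentral.com"])

# Hypothetical hostnames to sanity-check the pattern against.
for host in ["www.spamcentral.com", "spamcentral.com", "notspamcentral.com"]:
    print(host, bool(pattern.search(host)))
```

Escaping the dots and anchoring both ends is the design choice that matters here: a loose pattern can quietly exclude legitimate domains that merely contain the spam domain's name.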

Moz does a great job of explaining how to safeguard your data from spam parasites before they get in the door.

Dead bots

A good spambot is an omitted or filtered spambot. They wreak havoc on your data and send all sorts of false indicators. Identify any anomalies (either good or bad) in your metrics and follow the steps above to track down the culprit and eliminate it from your data.

May you have clean data, and we will see you next week for another edition of Web Analytics Monday.